Easy to say.

How do you get people to do the right thing? One way is to hope that morality, religion, or some such concept will do the trick. In academia, however, the answer is often testing and measurement. Students take exams; faculty are evaluated by citations, number of publications, number of publications in journals somehow ranked by prestige, etc.

Unfortunately, all evaluation and incentive systems have flaws and create incentives to cheat. They also have costs of operation. Exams must be designed and scored. Journals depend on time-consuming peer reviews, editorial time, etc.

A recent scandal at Stanford illustrates the point. From the NY Times:

The Research Scandal at Stanford Is More Common Than You Think

July 30, 2023 (The original contains photos of manipulated images.)

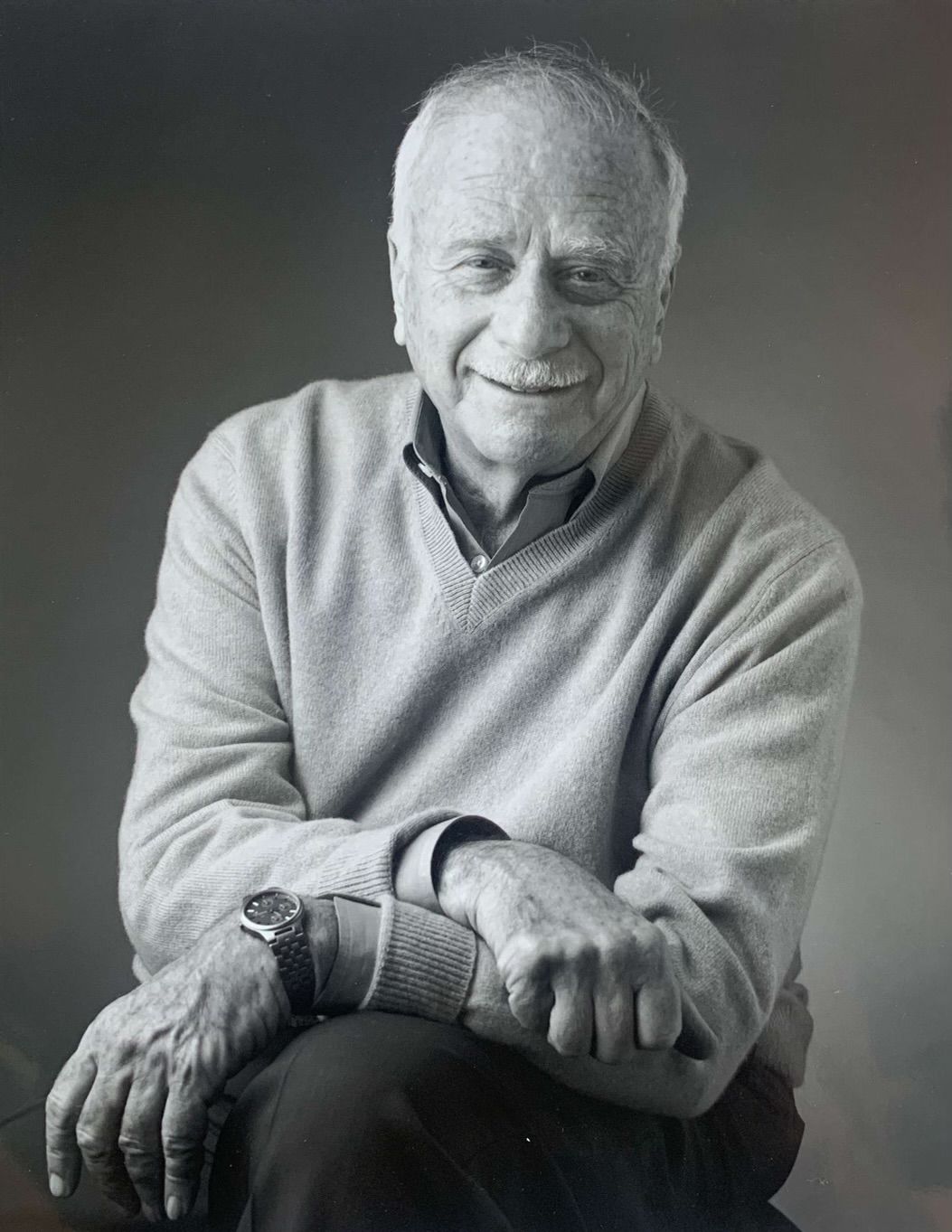

By Theo Baker. Theo Baker is a rising sophomore at Stanford University. He is the son of Peter Baker, the chief White House correspondent for The Times. At its daily student newspaper, he won a George Polk Award for investigating allegations of manipulated experimental data in scientific papers published by the university’s president.

===

There are many rabbit holes on the internet not worth going down. But a comment on an online science forum called PubPeer convinced me something might be at the bottom of this one. “This highly cited Science paper is riddled with problematic blot images,” it said. That anonymous 2015 observation helped spark a chain of events that led Stanford’s president, Marc Tessier-Lavigne, to announce his resignation this month.

Dr. Tessier-Lavigne made the announcement after a university investigation found that as a neuroscientist and biotechnology executive, he had fostered an environment that led to “unusual frequency of manipulation of research data and/or substandard scientific practices” across labs at multiple institutions. Stanford opened the investigation in response to reporting I published last autumn in The Stanford Daily, taking a closer look at scientific papers he published from 1999 to 2012.

The review focused on five major papers for which he was listed as a principal author, finding evidence of manipulation of research data in four of them and a lack of scientific rigor in the fifth, a famous study that he said would “turn our current understanding of Alzheimer’s on its head.” The investigation’s conclusions did not line up with my reporting on some key points, which may, in part, reflect the fact that several people with knowledge of the case would not participate in the university’s investigation because it declined to guarantee them anonymity. It did confirm issues in every one of the papers I reported on. (My team of editors, advisers and lawyers at The Stanford Daily stand by our work.)

In retrospect, much of the data manipulation is obvious. Although the report concluded that Dr. Tessier-Lavigne was unaware at the time of the manipulation that occurred in his labs, in papers on which he served as a principal author, images had been improperly copied and pasted or spliced; results had been duplicated and passed off as separate experiments; and in some instances — in which the report found an intention to hide the manipulation — panels had been stretched, flipped and doctored in ways that altered the published experimental data. All of this happened before he became Stanford’s president. Why, then, didn’t it come out sooner?

The answer is that people weren’t looking.

This year, a panel of scientists began reviewing the allegations against Marc Tessier-Lavigne, focusing on five papers for which he was a principal author.

In the earliest paper reviewed, a 1999 study about neural development, the panel found that an image from one experiment had been flipped, stretched and then presented as the result of a different experiment.

A 2004 paper contained similar manipulations, including an image that was reused to represent different experiments.

“Basic biostatistical computational errors” and “image anomalies” were found in a 2009 paper about Alzheimer’s that has been cited over 800 times, including the reuse of a control image with improper labeling.

At least four of the five papers appear to have manipulated data. Dr. Tessier-Lavigne has stated that he intends to retract three of the papers and correct the other two.

The report and its consequences are an unhappy outcome for a powerful, influential, wealthy scientist described by a colleague in a 2004 Nature Medicine profile as essentially “being perfect.” The first in his family to go to college, Dr. Tessier-Lavigne earned a Rhodes scholarship before establishing a lab at the University of California, San Francisco, in the 1990s and discovering netrins, the proteins responsible for guiding axon growth. “He’s one of those people for whom things always seemed to go just right,” an acquaintance recalled in the Nature Medicine article.

Anonymous sleuths had raised concerns of alteration in some of the papers on PubPeer, a web forum for discussing published scientific research, since at least 2015. These public questions remained hidden in plain sight even as Dr. Tessier-Lavigne was being vetted for the presidency of Stanford, an institution with a budget of $8.9 billion for next year — larger than that of the entire state of Iowa. Reporters did not pick up on the allegations, and journals did not correct the scientific record. Questions that should have been asked, weren’t.

Peer review, a process designed to ensure the quality of studies before publication, is based on a foundation of honesty between author and reviewer; that process has often failed to catch brazen image manipulation. And when concerns are raised after the fact, as they were in this case, they often fail to gain public attention or prompt correction of the scientific record.

This is a major issue, one that extends well beyond one man and his career. Absent public scrutiny, journals have been consistently slow to act on allegations of research falsification. In a field dependent on good faith cooperation, in which each contribution necessarily builds on the science that came before it, the consequences can compound for years.

When we first went to Dr. Tessier-Lavigne with questions in the fall, a Stanford spokeswoman responded instead, claiming the concerns raised about three of his publications “do not affect the data, results or interpretation of the papers.” But as the Stanford-sponsored investigation found and he eventually came to agree, that was not true.

Dr. Tessier-Lavigne’s plan to retract or issue robust corrections for at least five papers for which he was a principal author is a rare act for a scientist of his stature. It seems unlikely this would have happened without the public pressure of the past eight months; in fact, the report concluded that “at various times when concerns with Dr. Tessier-Lavigne’s papers emerged — in 2001, the early 2010s, 2015-16 and March 2021 — Dr. Tessier-Lavigne failed to decisively and forthrightly correct mistakes in the scientific record.”

The Stanford investigation did not find that Dr. Tessier-Lavigne personally altered data or pasted pieces of experimental images together. Instead, it found that he had presided over a lab culture that “tended to reward the ‘winners’ (that is, postdocs who could generate favorable results) and marginalize or diminish the ‘losers’ (that is, postdocs who were unable or struggled to generate such data).” In a statement, Dr. Tessier-Lavigne said, “I can state categorically that I did not desire this dynamic. I have always treated all the scientists in my lab with the utmost respect, and I have endeavored to ensure that all members flourish as successful scientists.”

Winner-takes-all stakes are, unfortunately, an all-too-common occurrence in academic science, with postdoctoral researchers often subject to the intense pressure of the need to publish or perish. Having a paper with your name on it in Nature, Science or Cell, the high-profile journals in which many of the papers reviewed by the Stanford investigation appeared, can make or break young careers. Postdocs are underpaid; Stanford recently purchased housing that was intended to be affordable for them, then reportedly set minimum salary requirements for living there higher than their wages. They are also jockeying to stand out in a field with limited lab positions and professorship openings. And senior researchers sometimes take credit for their postdocs’ work and ideas but brush off responsibility should errors or mistakes arise.

What isn’t common, of course, is the “frequency of manipulation of research data and/or substandard scientific practices” in the labs Dr. Tessier-Lavigne ran, the Stanford report concluded. Falsification, the technical term for much of this conduct, involves “violating fundamental research standards and basic societal values,” according to the National Academy of Sciences. In his statement, Dr. Tessier-Lavigne said that he has “always sought to model the highest values of the profession, both in terms of rigor and of integrity, and I have worked diligently to promote a positive culture in my lab.” Despite the report’s characterization of what went on in his labs as rare and irregular, lessons from this case apply across the field, especially regarding the importance of correcting the scientific record.

A 2016 study by a handful of prominent research misconduct investigators — including the well-known image analyst and microbiologist Elisabeth Bik, who helped identify a number of the manipulations in the work coming out of Dr. Tessier-Lavigne’s labs — showed that around 3.8 percent of published studies include “problematic figures,” with at least half of those showing signs of “deliberate manipulation.” But only approximately 0.04 percent of published studies are retracted. That gap, which shows a lack of accountability among both individual researchers and the scientific journals, is a profoundly unfortunate sign of a culture in which admitting failures has been stigmatized, rather than encouraged.

“My lab management style has been centered on trust in my trainees,” Dr. Tessier-Lavigne said in his statement. “I have always looked at their science very critically, for example to ensure that experiments are properly controlled and conclusions are properly drawn. But I also have trusted that the data they present to me are real and accurate,” he wrote.

Science is a team sport, but as the report concluded, Dr. Tessier-Lavigne, a principal author with final authority over the data, was responsible for addressing the issues in his research when they were brought to his attention. In the judgment of the Stanford investigation, he “could not provide an adequate explanation” for why he had not done so.

To his credit, he began the correction process for a few of these examples of data manipulation in 2015, when the first allegations were made publicly. But when Science failed to publish the corrections for two of those papers, the report found that after “a final inquiry on June 22, 2016, Dr. Tessier-Lavigne ceased to follow up.” He was made aware of the allegations once more when public discussion resumed in 2021. The report found that he drafted an email inquiring about the unpublished corrections but did not send it. He will now retract both those papers.

In another case — in which there was no public pressure to act — he was made aware “within weeks” of an error in a 2001 paper, according to the Stanford report. Although he wrote to a colleague that he would correct the scientific record, “he did not contact the journal, and he did not attempt to issue an erratum, which is inadequate,” the report concluded.

In the past few years, the field has made great strides to combat image manipulation, including the use of resources like PubPeer, better software detection tools and the prevalence of preprints that allow research to be discussed before it is published. Sites like Retraction Watch have also furthered awareness of the problem of research misconduct. But clearly, there is still progress to be made.

The shake-up at Stanford has already prompted conversations across the scientific community about its ramifications. Holden Thorp, the editor in chief of Science, concluded that the “Tessier-Lavigne matter shows why running a lab is a full-time job,” questioning the ability of researchers to ensure a rigorous research environment while taking on increasing outside responsibilities. An article in Nature examined “what the Stanford president’s resignation can teach lab leaders,” concluding that the case was “reinvigorating conversations about lab culture and the responsibilities of senior investigators.”

This self-reflection in the scientific research community is important. To address research misconduct, it must first be brought into the light and examined in the open. The underlying reasons scientists might feel tempted to cheat must be thoroughly understood. Journals, scientists, academic institutions and the reporters who write about them have been too slow to open these difficult conversations.

Seeking the truth is a shared obligation. It is incumbent on all those involved in the scientific method to focus more vigorously on challenging and reproducing findings and ensuring that substantiated allegations of data manipulation are not ignored or forgotten — whether you’re a part-time research assistant or the president of an elite university. In a cultural moment when science needs all the credibility it can muster, ensuring scientific integrity and earning public trust should be the highest priority.

Source: https://www.nytimes.com/2023/07/30/opinion/stanford-president-student-journalist.html.

===

Note that the last paragraph gets to the heart of the problem, but not necessarily in the way the author intended. He calls upon a "shared obligation" to prevent cheating. But a "shared obligation" is inherently a weak incentive. What is the cost to you as an individual scientist or academic of not doing your "share"? Even if you argue that science or academia as a whole would be better off if such scandals were prevented (that is, if everyone did their share), you have a classic potential collective action failure here: each individual bears the full cost of checking others' work but captures only a small part of the benefit.

Changing technology has made it easier to look for cheating in this particular case. But the incentive to investigate still lay largely with someone outside the system (i.e., someone at the college newspaper). Moreover, it was the fact that the cheating involved the president of Stanford that provided sufficient incentive for the investigation. Most cheaters are not presidents of universities. And there is much less incentive to investigate cheating in articles that appear in less prestigious journals or that make only marginal contributions.

Note also that technology can change in ways that make cheating easier, as with programs such as ChatGPT writing student essays. In short, honesty is not always perceived as being the best policy.